run inference on llama models on macbook m1

by msx47

[edited ]

Getting Llama

Llama is being distributed on torrents for some time now. The legality is grey so please excersise judgement. The model can be obtained via this GitHub repository. Follow the instructions and download the base models. The download size is around 220GB.

llama.cpp

From their GitHub

Inference of Facebook's LLaMA model in pure C/C++

This project is a C++ implemetation and is a Apple silicon first-class citizen. It uses 4-bit quantization to run the model on a macbook.

This is a new project and is being developed rapidly. I'll update this post if any breaking changes happen.

Setting up

Clone the llama.cpp GitHub repository. Now open this directory in a terminal and run make (must have gcc installed). This will compile the source files and result in 2 executables, main and quantize. We'll leave them for now.

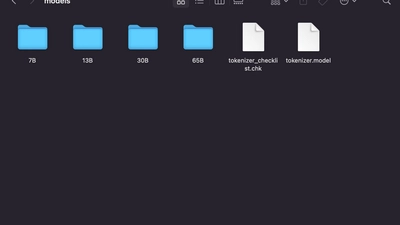

Next up download the llama models and place them inside the models directory in llama.cpp. The llama.cpp/models directory should look like this now.

Now we need to transform the .pth models from the downlaod to valid ggml files. For this we can use the convert-pth-to-ggml.py file. Make sure you have python3 installed using

python3 --version

Now install a few dependencies with pip or conda

For pip use:

pip3 install numpy torch sentencepiece

For conda use:

conda install numpy torch sentencepiece

The llama models has 4 variants of different parameter size. You can choose which one to run based on your RAM size.

| RAM | Model |

|-----|---------------|

| 16G | 7B and 13B |

| 32G | 30B and lower |

| 64G | 65B and lower |

Based on the above table run the following to convert the models to ggml:

python3 convert-pth-to-ggml.py ./models/13B 1

This will take a while (~10-15 mins) depending on the model you selected.

Make the quantize.sh executable with

chmod +x quantize.sh

After the ggml files are generated, run the following command to quantize the models. The argument to quantize script would be the model you transformed to ggml in the previous step.

./quantize.sh 13B

This also might take around 10-15 minutes to complete.

When the quantization is complete you can run the inference by providing it a prompt. To do so enter the following command.

./main -m ./models/13B/ggml-model-q4_0.bin -p "Building a website can be done in 10 simple steps:" -t 8 -n 512

This command will load the model and genrate a response based on your prompt. If you get a segmentation fault or a very slow response here then that is probably due to low RAM.

The project is being worked on currently and will have faster load times for models soon. I'll update this post in case of a major change.